By Blake Anderson, CFA®, Associate Portfolio Manager

“Demand for AI compute is skyrocketing.” ~Jensen Huang at Consumer Electronics Show 2026

In my opinion, this year’s Consumer Electronics Show (‘CES’) might as well have been called the ‘Nvidia Show-off Show.’ Nvidia used the opening-night keynote address to introduce its newest chip family, Rubin, and to detail how thinking models are driving AI demand. Nvidia is no longer just a chip and hardware company. It is growing into a model and software company that aims to power the entire next-generation technology stack.

Welcome, Rubin

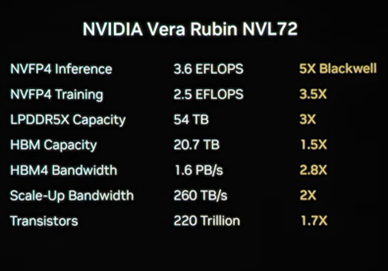

The Rubin family of GPUs is Nvidia’s latest generation of chips. Set to launch later this year, Rubin promises to continue the torrid pace of performance improvements Nvidia has become known for. As shown below, the company claims that Rubin will deliver 5x the inference performance of its predecessor, Blackwell, and up to 3.5x the training performance. Considering that Blackwell delivered roughly 3x the performance of its own predecessor, Hopper, this acceleration in generational gains is astounding.

As Jensen Huang put it, “Vera Rubin arrives just in time for the next phase of AI”: thinking.

Remember DeepSeek? Thinking Has Exploded

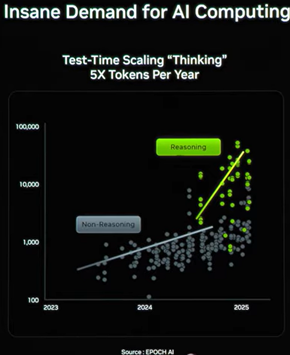

It was just under a year ago, on January 27th, 2025, that the world woke up to DeepSeek’s reasoning capabilities. Although Nvidia stock fell 17% that day, investors evidently missed that DeepSeek’s breakthrough ushered in a whole new era of AI compute dubbed “thinking.” In the nearly twelve months since, thinking models have taken over compute demand. Nvidia revealed at CES both that thinking is now the biggest use of AI compute (ahead of non-reasoning models) and that thinking-token consumption is growing materially faster than other workloads (at nearly 5x per year!).

Models capable of thinking have set the stage for what Nvidia sees as AI growing beyond just LLMs.

Shifting Focus

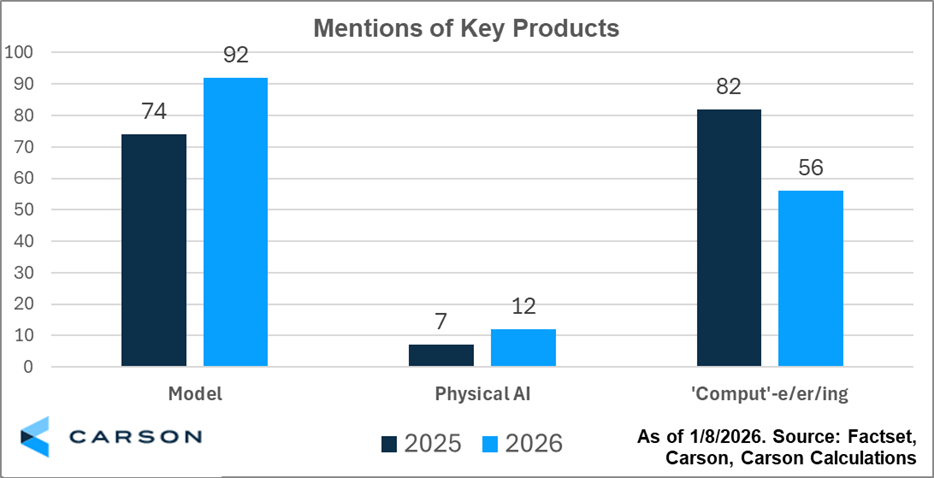

What might have caught investors most off guard is Nvidia’s shift in focus from hardware (such as GPUs and other chips) to models and software. As shown below, a transcript analysis of this year’s keynote compared with last year’s reveals a jump in mentions of “Model” and “Physical AI” and a notable drop in mentions of “Comput-” (compute, computer, computing). To me, this signals that Nvidia is thinking about applications of AI beyond core hardware performance gains.

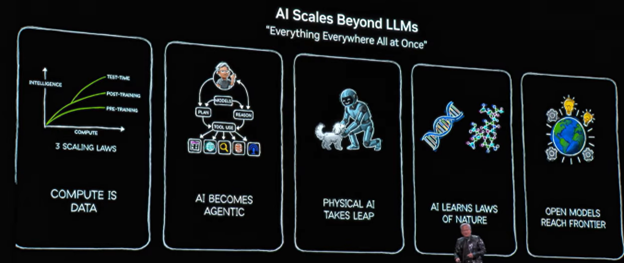

Jensen Huang devoted nearly half the presentation to five ways ‘AI is Scaling Beyond LLMs.’ While ‘compute is data’ (and compute will remain necessary), Mr. Huang was clearly focused on how AI, and Nvidia’s chips, will be used rather than simply on building a more performant product. Nvidia sees many emerging uses of AI, pictured below, including agents, physical AI, broader simulations of nature, and open-source models cultivated to spur creativity.

Nvidia stole the show at CES 2026, and rightfully so. The Rubin family of chips promises an even bigger leap in performance than its predecessor delivered. These gains may help reasoning models, which have quickly become one of the biggest consumers of AI compute, expand beyond the chatbots we have all become familiar with. And Jensen Huang, always peering around the corner at what’s to come, is sharpening his focus on the real-world impact AI can deliver, such as agents and physical AI applications.

- Cover image courtesy of TheStreet https://www.thestreet.com/investing/what-nvidia-just-did-could-rewire-the-ai-race

- All slides pictured courtesy of Nvidia https://www.youtube.com/watch?v=0NBILspM4c4

8704372.1. – 12JAN26A