Nvidia hosted its annual GPU Technology Conference (“GTC”) this past week, showcasing its latest technology. The event marked the company’s first opportunity to address investor concerns following the DeepSeek-driven drawdown, which raised questions about future demand for computing power. Nvidia unequivocally painted a bullish picture, emphasizing how large language models (“LLMs”) have evolved significantly in recent months. Recent breakthroughs, the company suggested, could serve as catalysts for further growth in demand for computing. To help meet this anticipated demand, Nvidia showcased its latest Blackwell chips, designed to deliver a generational boost in computing power.

Shares of Nvidia have lagged the broader market recently, as investors remain uncertain about the outlook for demand for computing power. DeepSeek’s discovery, which my colleague Sonu Varghese, VP, Global Macro Strategist, wrote about, upended expectations about the trajectory of LLM development. To the extent investors valued Nvidia by projecting forward LLM-driven demand, the decline in Nvidia shares since the discovery reflects either greater uncertainty or outright downward revisions to expected demand. At GTC, Nvidia sought to dispel these concerns and instill investor confidence in its growth outlook.

In what was perhaps Nvidia’s clearest rebuttal yet to the ‘DeepSeek’ selloff fears, CEO Jensen Huang provided a detailed comparison of traditional LLMs and next-generation reasoning models, illustrating how computing demands may actually increase. ‘Traditional’ LLMs, such as earlier versions of ChatGPT, generate text one token (roughly a word or word fragment) at a time, based on what seems most contextually appropriate. By contrast, reasoning models continuously “check their work,” verifying whether their responses are both contextually sound and logically accurate.

To illustrate this distinction, Mr. Huang presented a real-world example comparing the compute requirements of traditional LLMs and reasoning-based LLMs. He posed the following prompt to each:

“I need to seat 7 people around a table at my wedding reception, but my parents and in-laws should not sit next to each other. Also, my wife insists we look better in pictures when she’s on my left, but I need to sit next to my best man. How do I seat us on a round table? But then, what happens if we invited our pastor to sit with us?”

As shown below, the traditional LLM correctly handled the first requirement (separating parents and in-laws) but failed to satisfy the remaining conditions, because it lacked the ability to verify its work. In contrast, the reasoning model generated a seating arrangement that met all criteria. However, achieving this level of accuracy required nearly 20 times more ‘compute tokens.’ If investors were concerned that DeepSeek’s discovery might dampen computing demand, a 20x increase in compute requirements for next-generation reasoning models may alleviate those fears.

Slide image courtesy of Nvidia

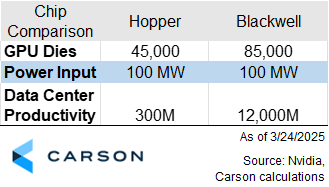

Nvidia aims to capture this increase in compute demand by launching the industry’s most powerful products to date. The company’s newest chip, Blackwell, draws on customer feedback and technological innovation to enable more efficient AI model training and execution. Nvidia claims that Blackwell can deliver 40 times more token-generation capacity for the same power input. According to company data, data centers currently standardized on the Hopper architecture can generate 300 million tokens per second (defined as “Data Center Productivity” in the table below). In contrast, the same data centers, if upgraded to Blackwell, could generate up to 12 billion tokens per second while keeping power consumption stable. This means data center operators facing supply constraints could see an immediate boost in productivity by transitioning to Blackwell. In my opinion, product innovation like this keeps Nvidia ahead of its chip competitors.
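A quick back-of-the-envelope check in Python confirms that the two throughput figures quoted above are consistent with the 40x claim. The inputs are simply Nvidia’s own figures as reported here, not independent measurements, and the constant and function names are illustrative.

```python
# Throughput figures as quoted by Nvidia (company claims, not independent data)
HOPPER_TOKENS_PER_SEC = 300_000_000        # 300 million tokens per second
BLACKWELL_TOKENS_PER_SEC = 12_000_000_000  # 12 billion tokens per second


def throughput_multiple(new_rate: float, old_rate: float) -> float:
    """Return how many times larger new_rate is than old_rate."""
    return new_rate / old_rate


multiple = throughput_multiple(BLACKWELL_TOKENS_PER_SEC, HOPPER_TOKENS_PER_SEC)
print(f"Blackwell vs. Hopper: {multiple:.0f}x")  # prints "Blackwell vs. Hopper: 40x"
```

Because power consumption is held constant in Nvidia’s comparison, the same 40x multiple would also apply to tokens generated per unit of power.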

Nvidia’s latest GTC keynote was a strategic effort to reassure investors that the outlook for advanced computing remains strong, even amid evolving LLM technologies. By demonstrating that reasoning-based LLMs demand substantially more computing power to generate accurate responses, the company reinforced the case for continued reliance on its products. Moreover, Nvidia is not standing still. Its latest product, Blackwell, offers a significant leap in compute power over the current generation of chips, helping customers enhance data center productivity and further solidifying the company’s competitive edge.

For more content by Blake Anderson, CFA®, Associate Portfolio Manager, click here.

7777752-0325-A